

Top Stories


Transgender 'Vampire' Convicted of Sexually Assaulting Disabled Teen, Accused of Murder in Separate Case

A Wisconsin man who claims he is a vampire as well as a woman has been convicted in one case and now faces a murder trial.

By Jack Davis

April 16, 2024


Travis Kelce Angers Taylor Swift Fans After Reaction to Pro-Trump Post, Stirs Up Major Controversy

This one has it all - Travis and Taylor and the Donald. Swifties outraged after Kelce likes a picture in which Trump appears.

By Mike Landry

April 16, 2024


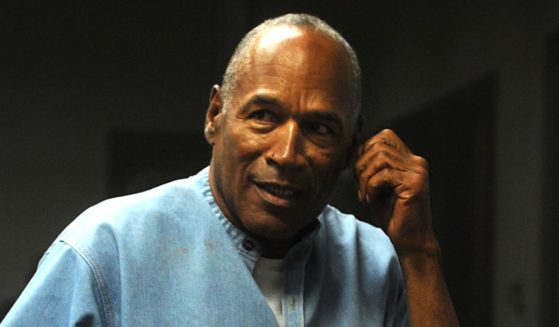

OJ Simpson's Lawyer Reverses Course, Says He Will Pay Out $33.5 Million to Families of Nicole Brown Simpson and Ron Goldman

Upon hearing the news he had been put in command of Simpson's estate, the lawyer was peeved about a comment from Fred Goldman.

By Johnathan Jones

April 16, 2024


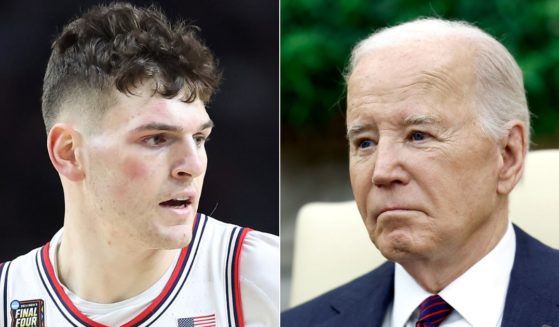

UConn Star Says Biden Was 'Out of It' During WH Visit - 'Couldn't Understand What He Was Saying'

A UConn star's recollection of a short time in the Biden White House paints a picture of a president with serious problems.

By Jack Davis

April 16, 2024


Car Insurance Skyrockets 50% Since 2021 - There Are 4 Reasons Why

The cost of car insurance, which has been skyrocketing, is buffeting Americans in this era of Joe Biden, so why is it happening?

By Warner Todd Huston

April 16, 2024


'Unbiased' CNN Reporter Gets Wake-Up Call from Normal Americans When He Can't Imagine Why Anyone Would Miss Trump Years

A CNN commentator turns to armchair psychology to try to understand Americans -- and remembers a college ex-girlfriend.

By Joe Saunders

April 16, 2024


Pro-Palestinian Agitators Attempting to Block Miami Road Find Out Things Are Different in Florida

Miami police removed pro-Palestinian protesters who blocked traffic in yet another show of characteristic left-wing narcissism.

By Michael Schwarz

April 16, 2024


Man Pulls Gun on Carjacker, Ends Up Dead in Harrowing Turn of Events

This tragedy underscores that having a pistol does not guarantee safety, and you should be well-trained before carrying one and using it.

By Samantha Chang

April 16, 2024


Bible Prophecy: What Do Israel's Mysterious Red Cows Have to Do with Middle East Tensions and End Times?

Red heifers delivered from Texas to Israel may well be another sign that the world is entering the end times.


The True Jesus: Dispelling the Liberal Myths and Misperceptions - Part Three

Christians can and should fight the culture war; we simply need to understand God’s rules of engagement first.


The Left Is Wrong: A Century of Data Shows the Net Result of Taxing the Rich

A revealing new study examined the introduction of state income taxes and their impact on where the wealthy choose to live.
